3 Oct 2024

Lost in conversation? Uncertainty, ‘value-alignment’ and Large Language Models by Sylvie Delacroix

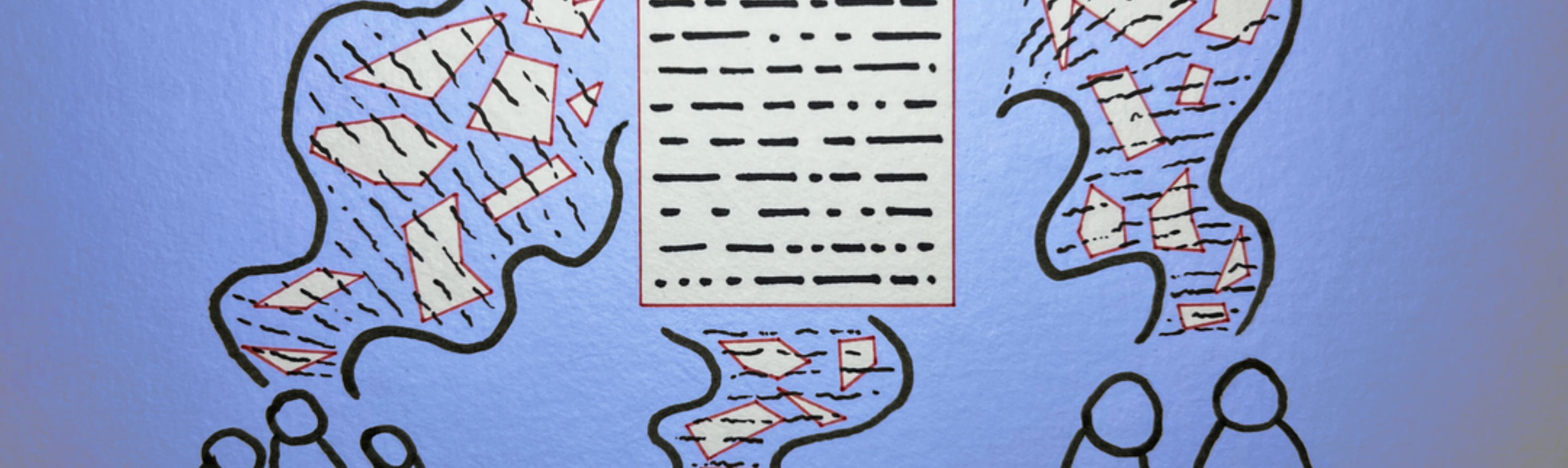

Picture by: Yasmine Boudiaf & LOTI / Better Images of AI / Data Processing / CC-BY 4.0

On October 3, Sylvie Delacroix (Inaugural Jeff Price Chair in Digital Law, King’s College London; Fellow, Alan Turing Institute) is giving a lecture titled "Lost in Conversation? Uncertainty, ‘value-alignment’ and Large Language Models".

Abstract

How could talk of ‘AI alignment’ ever turn out to be bad for us? As long as we are clear about the challenges in the way (think of the huge variety of often clashing values), surely there is nothing wrong with striving to ‘value-align’ AI tools? Russell warns us against the ‘mistake’ of ‘putting in values’, ‘because getting the values (or preferences) exactly right is so difficult and getting them wrong is potentially catastrophic’ (Russell 2019).

What if the danger is not so much that this alignment endeavour might get the values wrong, but instead that it might end up compromising the very spring these values stem from? Moral values are not mysterious entities we somehow end up caring about. They are the by-product of our daily endeavours to find our way around the world. Just as we might criticise a wavy plank of wood as a bad example of wood shaving, so we might criticise a less-than-loyal friend or an overly narrow, stunting education. To speak of education, justice or healthcare as ‘value-loaded practices’ is to acknowledge the extent to which these practices owe their daily renewal to a commitment to keep questioning what counts as health, justice or education. The resulting process of re-articulation does not take place in a vacuum. We converse.

As in-between spaces that enable a to-and-fro movement between principles and emergent, ‘imperfectly rationalised intuitions’, conversations are critical. Their qualitative openness is not only key to the constructive airing of different worldviews. At a more primitive level, they also allow for the gradual refinement of the bundle of interpretive habits and intuitions we rely on to make sense of each other. Hopefully further refined through back-and-forth dialogue, these fore-understandings act as enabling prejudices that both disclose and delimit what can be understood from the interlocutor. What happens when this interlocutor is no longer human? What happens when our ingrained propensity to make sense of the world through conversation intersects with the novel ability of Large Language Models (LLMs) to produce naturalistic and contextually appropriate responses?

The conjunction of these two forces (LLMs’ ability to simulate conversational turn-taking and our drive to engage in ‘sense-making’ conversation) has catalysed a situation in which we find ourselves treating these tools as conversational partners. The full ethical significance of this development cannot be grasped from a static or passive understanding of values. If, by contrast, values are considered the by-product of our imagining how the world (or the way we live together) could be different, the normative labour required to commit ourselves to such visions may be one worth bolstering.

The good news is that there are ways of designing LLMs that can do just that. The bad news is that commercial incentives are unlikely ever to push us past the current ‘harmlessness’ paradigm. As an example, the concern to avoid unwarranted epistemic confidence on the part of LLM users is driving research meant to enable LLMs to communicate their outputs with built-in measures of uncertainty. Important as it is, this work overlooks the extent to which signalling uncertainty can also be a way to create conversational space for the productive articulation of diverse viewpoints. This objective leads to different design choices from those meant to encourage further fact-finding.

Today we stand at a crossroads. LLM interfaces could be made to incentivise the kind of collective participation that keeps us from merely standing on the receiving end of LLMs’ impact upon cultural paradigms of value negotiation. Or we could remain captive to the ‘value-alignment’ lingo, looking forward to offloading our chaotic normative labours onto ‘enlightened’ AI tools.

About the speaker

Sylvie Delacroix's work focuses on data & machine ethics, ethical agency and the role of habit within moral decisions. She is the Inaugural Jeff Price Chair in Digital Law at King's College London. She is also a fellow at the Alan Turing Institute and a visiting professor at Tohoku University. Her recent work examines the data ecosystem that has made Generative AI possible, the way it is now being put in jeopardy by those very tools, and the lack of data empowerment.