October 05, 2023

Foundation models and the privatization of public knowledge

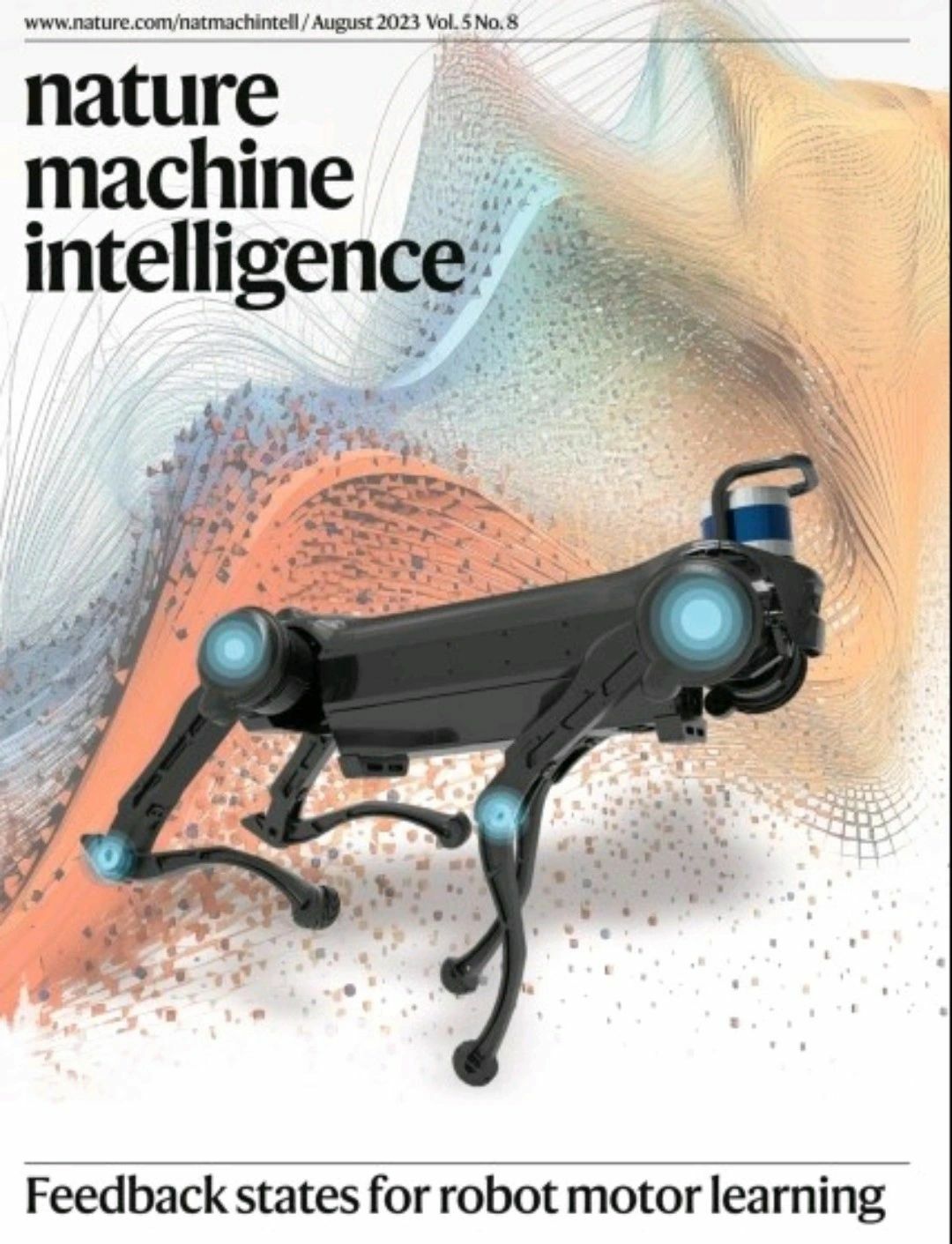

"To protect the integrity of knowledge production, the training procedures of foundation models such as GPT-4 need to be made accessible to regulators and researchers. Foundation models must become open and public, and those are not the same thing", write AlgoSoc PI José van Dijck and researchers Fabian Ferrari and Antal van den Bosch in the September issue of Nature Machine Intelligence.

Their article discusses concerns surrounding ChatGPT, the AI chatbot developed by OpenAI. These concerns span safety, plagiarism, bias, and accuracy, with a particular focus on how large language models (LLMs) owned by private entities may affect knowledge production. The authors note that OpenAI, despite its nonprofit origins, has partly transformed into a capped-profit company with Microsoft as a major investor. Whether regulators and scientists will have access to the inner workings of deep neural networks, including their training data and procedures, remains under-addressed. This lack of transparency could hinder thorough inspection, replication, and testing, potentially compromising the integrity of public knowledge.

In their article, Van Dijck et al. also examine the training data and procedures of foundation models like GPT-4 and PaLM. While some information is available about the datasets used, details about their specific composition and sources remain undisclosed. The authors argue that making foundation models both open and public is crucial. "Open" refers to making them available for detailed inspection and replication, while "public" implies treating them as utilities accessible to all. Van Dijck et al. suggest that ensuring transparency, implementing technical safeguards, and enacting legal and regulatory measures are essential steps. They also emphasize the need for improved AI literacy among citizens to foster a democratic understanding of these technologies in the context of knowledge creation.

Cite this article: Ferrari, F., van Dijck, J. & van den Bosch, A. Foundation models and the privatization of public knowledge. Nat Mach Intell 5, 818–820 (2023). https://doi.org/10.1038/s42256...
