May 17, 2024

Mind the A(I)ge gap? Emerging generational fault lines in public opinion on Artificial Intelligence

Since 2022, and especially since the release of the ChatGPT chatbot, generative artificial intelligence (AI) has attracted a great deal of attention. The technology's ability to autonomously create human-seeming text, images, and video has generated both excitement and concern. It has the potential to transform many aspects of life, from increasing company productivity to supercharging disinformation campaigns. It is therefore crucial to understand how the Dutch population feels about these technologies. The AlgoSoc AI Opinion Monitor set out to do exactly this, asking a representative sample of Dutch citizens how they felt about generative AI. While opinions differed widely, a clear pattern emerged: age strongly shapes how people use and think about generative AI.

Do Only the Young Use Generative AI?

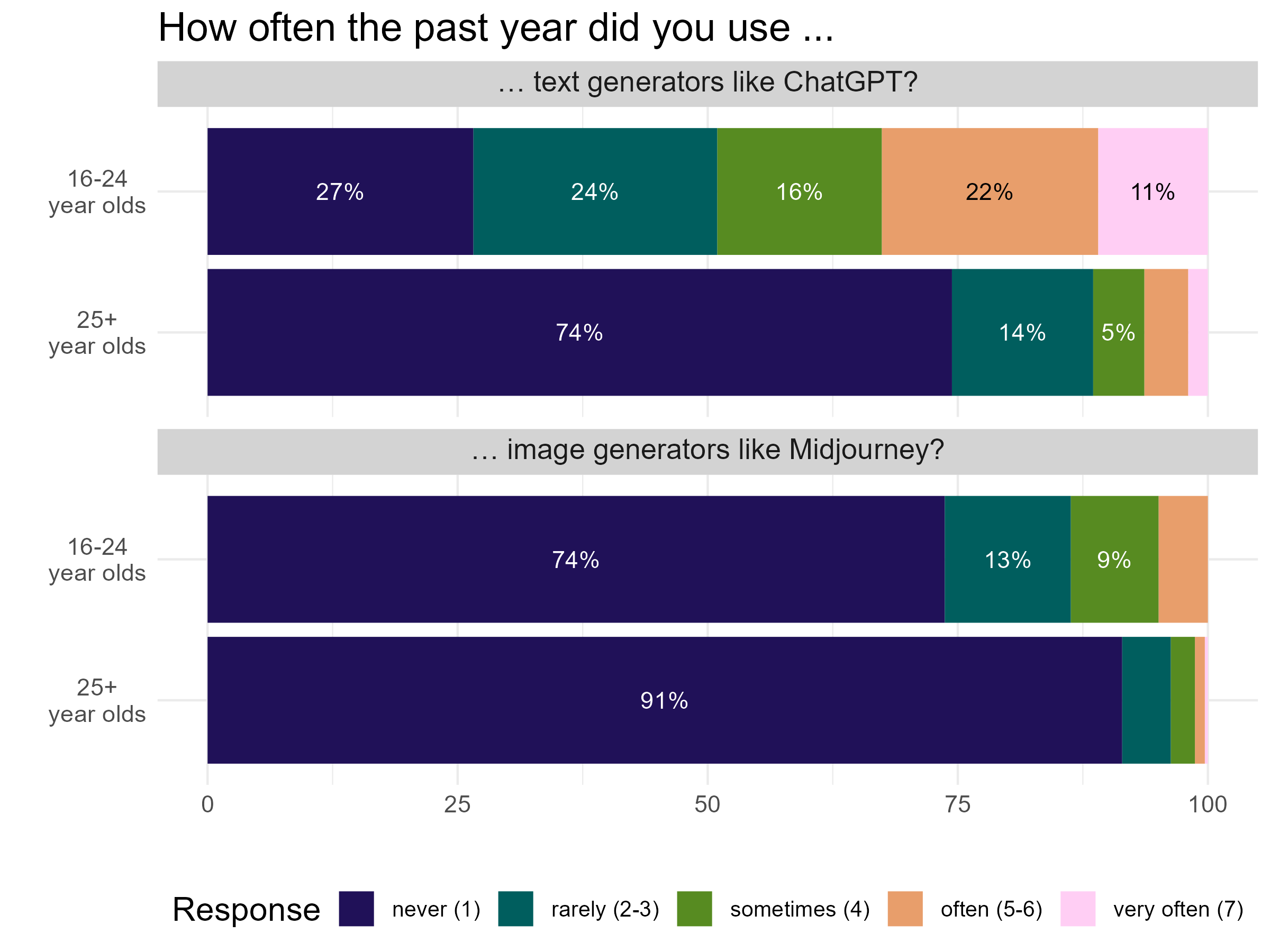

Despite all the talk about how impressive AI is at creating new texts and images, most people in the Netherlands aren't actually using it. The AlgoSoc AI Opinion Monitor found that over 70% of the population had never used AI to generate text, even when asked specifically about the well-known ChatGPT chatbot. Even fewer people have tried making images with AI: 89% never had. This gap between how much people hear about AI and how little they actually use it raises some questions. Why aren't more people taking up AI? Perhaps they have privacy or ethical concerns, or they simply don't see how it could help them.

However, this pattern differs sharply across age groups. Younger people, aged 16 to 24, report being much more familiar with generative AI: only about 26% of them say they've never tried text or image generation tools. Among people aged 25 and older, the picture is reversed: about 74% have never used them.

Why is there such a big difference between age groups? The data cannot give us a definitive answer, but we can speculate. Prof Dr Claes de Vreese, AlgoSoc scientific co-director, believes this has to do with how comfortable younger people are with new technologies: “Younger people are, in general, more technologically literate, with this generation particularly having never lived in a moment when the internet did not exist. As such, they are more self-confident in their ability to experiment and integrate new technologies into their everyday routines.” Since younger people are also more likely to still be in school, it's possible they have used it for one of its most infamous purposes: completing school and university assignments.

This age gap may complicate the growing narrative about AI's potential to increase productivity in the working-age population. With such low uptake among older people, promised productivity gains may take longer to materialise. The issue may also extend beyond technological proficiency: older generations may harbour more reservations about AI, while younger cohorts embrace it with greater enthusiasm. The AI Opinion Dashboard, which breaks down attitudes towards AI by age group, suggests this might be the case. Exploring these generational dynamics could provide valuable insights into the evolving relationship between society and technology.

Encountering AI-Generated Content

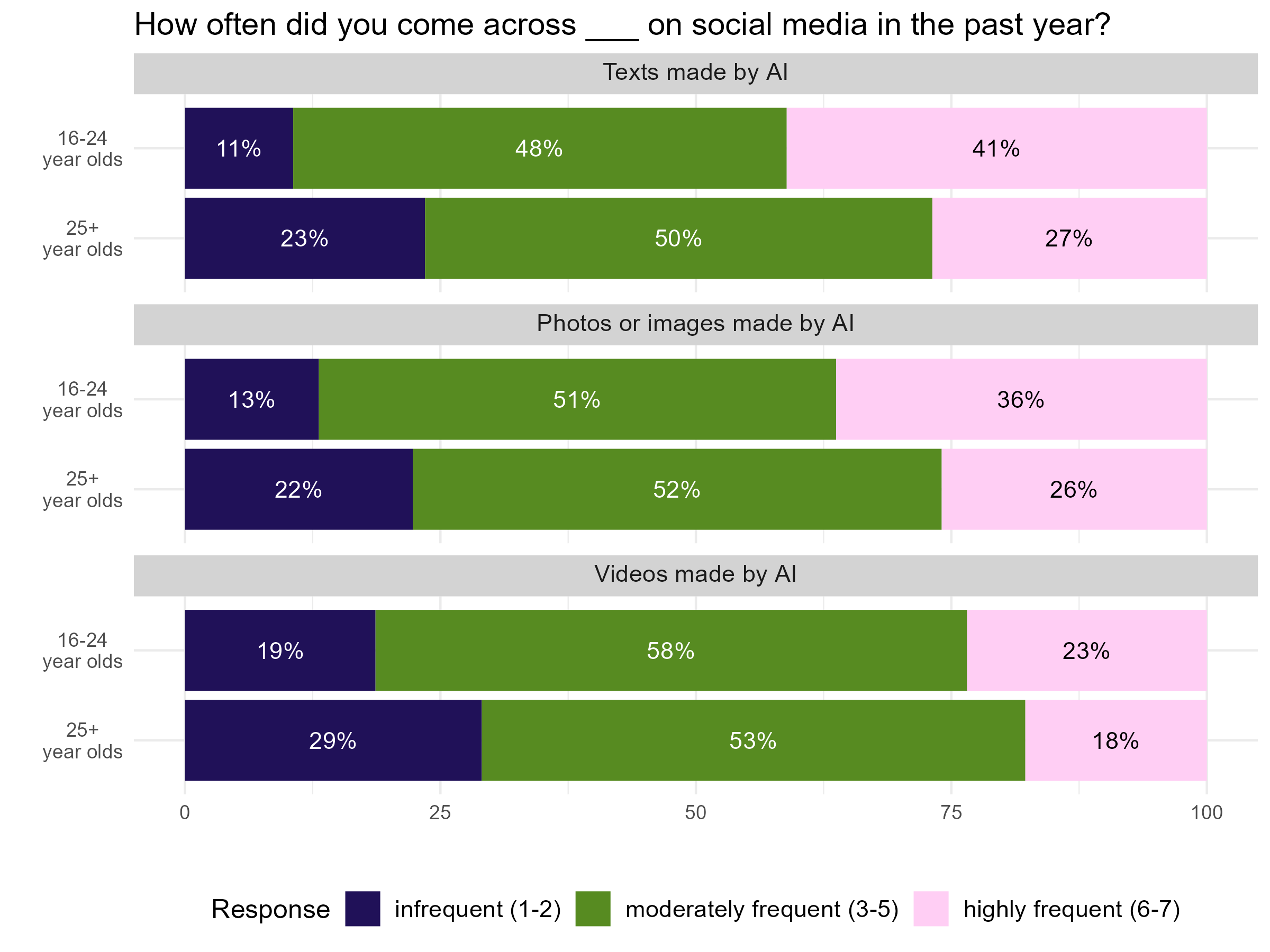

While most people in the Netherlands aren't using generative AI tools, they believe that others are. The AlgoSoc AI Opinion Monitor asked participants how often they believe they come across AI-generated texts, images, and videos on social media. Of those who answered, only 15% said they had never encountered AI-generated text on social media, and a further 7% said they encountered it infrequently. Almost half (49%) reported seeing AI-generated text moderately frequently, and 28% said they saw it highly frequently. Interestingly, reported encounters with AI-generated images are more frequent than with text. This is likely because AI image-generation systems have developed more slowly: their output often still has a glossy look and makes some obvious mistakes, such as giving people extra fingers. While AI-generated text is very hard to distinguish from human-written text, AI images may be easier to identify.

Younger people say they see AI-generated content more often: 41% of those aged 16 to 24 say they see AI-generated text on social media highly frequently, compared with 27% of those aged 25 and older.

However, the data cannot tell us whether older participants genuinely see less of this content, whether they simply spend less time on social media, or whether they fail to detect AI-generated content when they encounter it and therefore underestimate how much of it they see online. Conversely, it might be that using these technologies more leads people to think they see such content more often. Prof Dr Theo Araujo, senior researcher at the AlgoSoc consortium, argues that "It might as well be that the more people use these technologies, the more they become able to detect it in content - and also to assume that others are using it as well".


Confidence in Recognizing AI

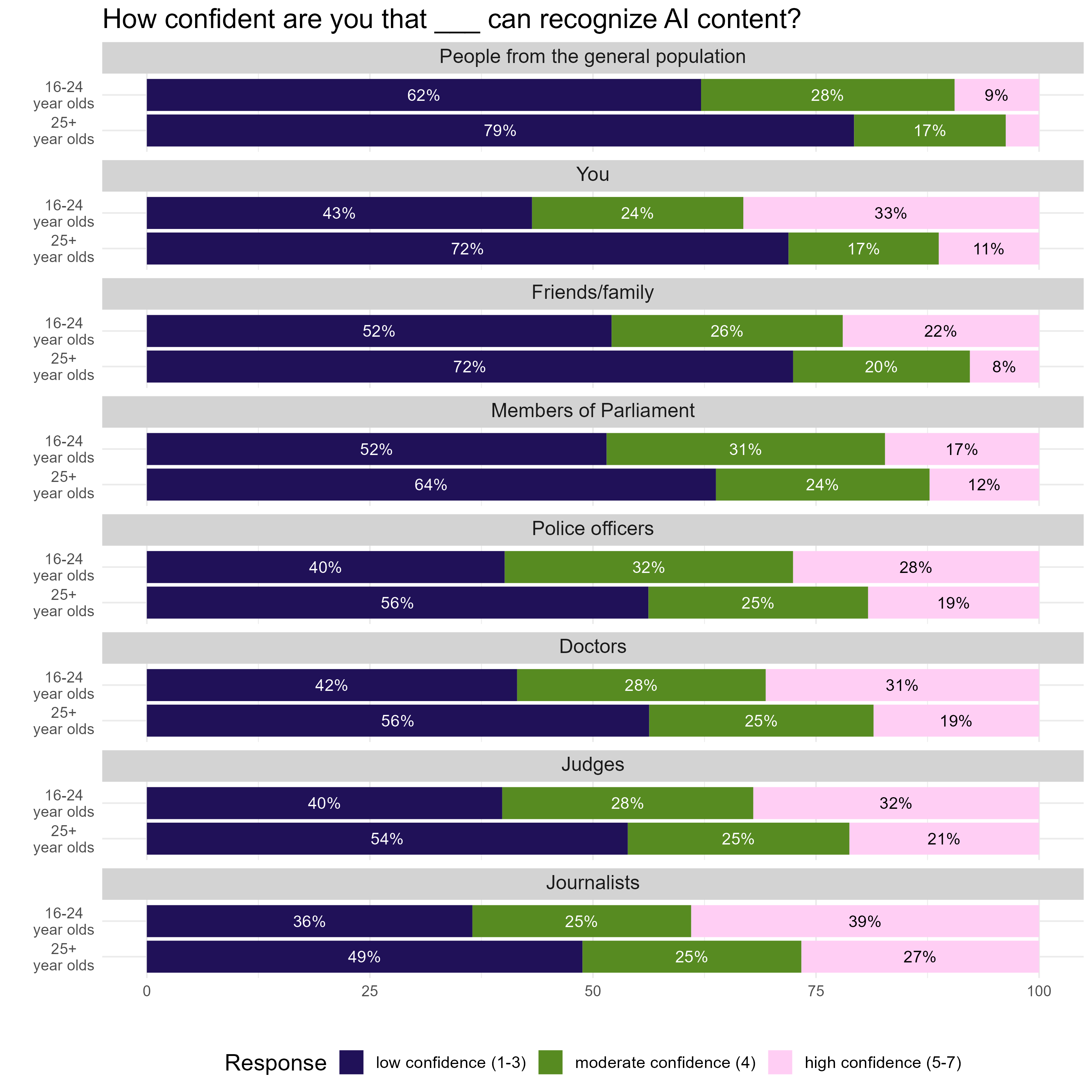

This brings us to our next finding: how capable citizens believe they are at telling whether content is AI-generated. Overall, they don't feel very confident, with 68% of respondents reporting low to no confidence in their own ability to spot AI-generated texts. They are slightly more confident in themselves than in the general population, but not by much: 76% reported low to no confidence in the general population's ability to identify AI-generated texts.

This kind of thinking, where we believe others are more easily fooled than we are, is called the 'third-person effect'. It's not great news, especially given that online disinformation campaigns try to make us doubt everything we see. “Disinformation campaigns try to alter the information ecosystem, to the point where we question everything around us. If we have little trust in others’ ability to spot AI-generated content, we likely believe they are more susceptible to disinformation campaigns, which is bad news for democracy,” says Prof Dr de Vreese.

People in the Netherlands also have little confidence in the ability of key societal figures, such as journalists, judges, doctors, police officers, and members of parliament, to spot AI-generated content. On average, only 44% of the population has somewhat to high confidence that these figures can identify AI-generated content, while 56% have low to no confidence that they can.

Journalists were the group most trusted to recognize AI-generated content: 51% of participants reported somewhat to high trust in their ability to detect it. This is considerably higher than for members of parliament, for example, where only 36.6% of the population has high trust in these elected officials' ability to detect this type of content. "While this is good news when thinking about the importance of journalism to inform the public debate, it also highlights how important it is for journalists to have sufficient resources to be able to take on this role", says Prof Dr Araujo.

Younger and older people also differ in how confident they are in their own and others' abilities to spot AI-generated content. The biggest difference concerns their own abilities: younger people are more than twice as likely as older people to feel confident. Of those aged 16-24, 55% reported feeling somewhat to highly confident in their ability to detect AI-generated content, compared with only 27% of those aged 25 and older. The difference is less pronounced for confidence in other groups, but it still exists. For example, journalists enjoy mid to high confidence among both a large share of young participants (62%) and a large share of older participants (50%).

Keeping an Eye on the (AI)ge Gap

Evidence from other new technologies, such as social media, suggests that differences between older and younger people tend to narrow over time. Will the age gap in the use of, and attitudes towards, generative AI follow this pattern? Or are the low usage and negative opinions a symptom of deeper distrust of these systems? AlgoSoc hopes to provide some answers in the coming years: by surveying the Dutch population on these questions until 2027, the project will offer in-depth insight into how these attitudes evolve over time.

If you want to explore the age gap across other AI attitudes and uses, check out our interactive AI Opinion Monitor!

To cite the data, please use: de León, E., Wan, J., Votta, F., van der Haak, D., Oberski, D., Taylor, L., Araujo, T., van Weert, J. C. M., Bex, F., van Dijck, J., Gürses, S., Buijzen, M., Prins, C., Helberger, N., & de Vreese, C. (2024). Public Values in the Algorithmic Society Longitudinal Panel Survey. University of Amsterdam. https://doi.org/10.21942/uva.25860655
