April 03, 2024

The EU AI Act and its Implications for the Media Sector

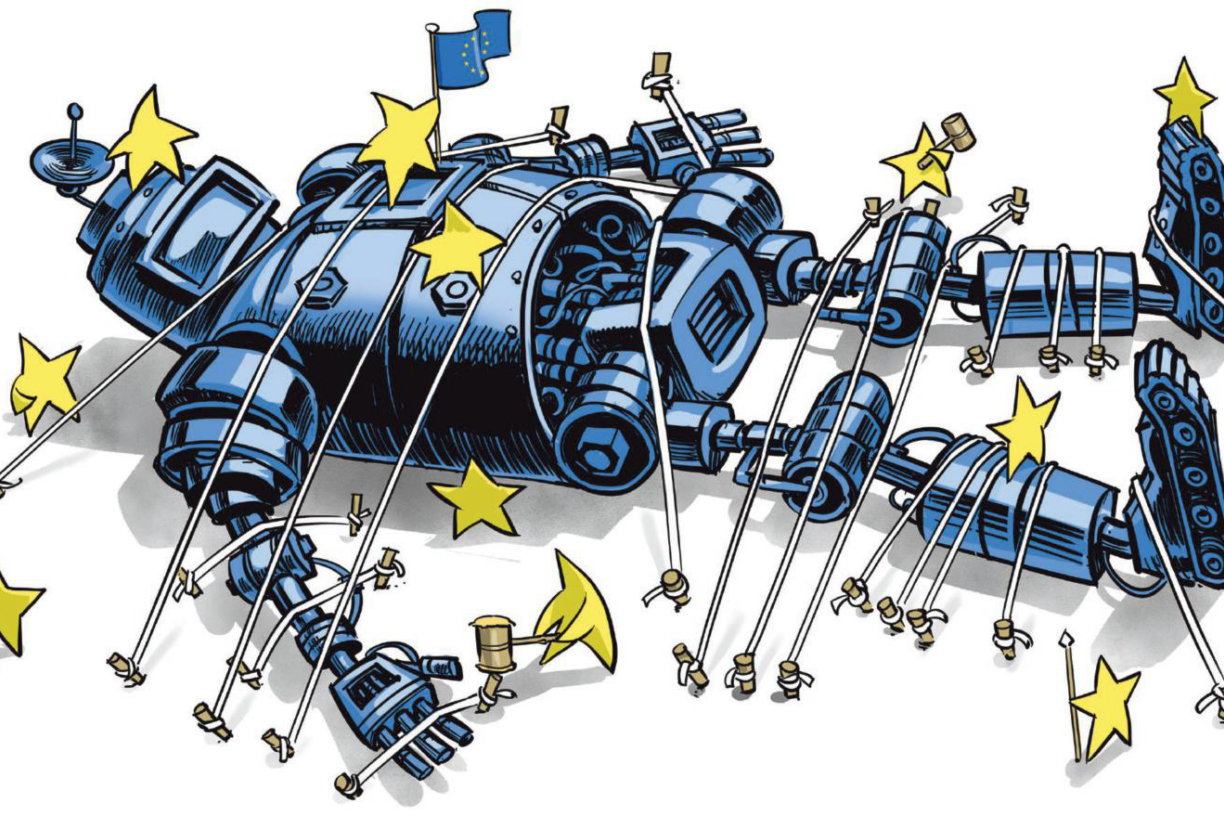

All eyes are currently on the European Union’s AI Act, the first comprehensive law on artificial intelligence. After an agreement was reached in December 2023, the finalized version was eagerly anticipated. The adoption of the AI Act is rightfully hailed as a landmark occasion in tech governance, and the Act is likely to set the example for future legislative initiatives that aim to regulate general-purpose AI models and generative AI applications across the world.

Together with the AI, Media & Democracy Lab, AlgoSoc hosted a panel discussion on the AI Act’s implications for the media ecosystem. Academic and industry panelists Prof. Natali Helberger, Dr. Sophie Morosoli, Prof. Nicholas Diakopoulos, and Agnes Stenbom discussed topics such as the AI Act’s risk-based approach to media, its impact on journalistic practices, copyright concerns, and user safety. The discussion was moderated by Dr. Stanislaw Piasecki and Dr. Laurens Naudts.

Media and The Risk-Based Classification

Prof. Natali Helberger kicked off the discussion with a brief presentation covering the relevant points of the most recent AI Act draft. She drew attention to two key matters: those affecting the media industry as users of AI on the one hand, and as producers of AI on the other.

The AI Act works with a broad definition of AI, grouping various technologies like recommender systems, automated translations, and generative AI under the same umbrella. These technologies are categorised from low-risk to high-risk, which determines the level of regulation applicable to them. While high-risk systems will be heavily regulated, those categorised as low-risk will not.

Media is currently not categorized as high-risk, which means more extreme regulations will not apply to developers of media AI systems. The most relevant revision for them to consider is the obligation of technical documentation and transparency about the models they are developing. Concretely, this transparency applies to the model itself, but also the training data going into it, the testing processes, and their results.

As users and deployers of AI technology, it is essentially up to media organizations to select responsible AI technologies. Furthermore, transparency obligations on the use of AI in content creation will most likely not apply to editorial media content.

Helberger concluded that the AI Act generally offers fewer legal guarantees and fewer legal requirements for media organizations, which is why it is important to ensure standards for technical documentation and to take specific information needs into consideration. She suggested working toward creating room for discussions on industry growth for providers of journalistic AI together with the European Commission and the AI Office.

Citizens’ Perspectives on AI and Transparency

Picking up on the aspect of transparency, Sophie Morosoli elaborated on Article 52 of the AI Act, which addresses it specifically. She pointed to her survey of Dutch citizens’ attitudes toward AI and the use of genAI in journalism, which found that the majority of participants valued being informed about the use of AI. Sophie highlighted the respondents’ feeling of entitlement to know whether they are communicating with AI, and the feeling of manipulation if that information is kept from them. Generally, Dutch citizens trusted AI less, and they highlighted emotions and empathy as typical human characteristics, which AI systems are less likely to exhibit.

Following these results, Morosoli suggested questioning the effectiveness of transparency labels and their effect on citizens’ trust in AI. For the media sector concretely, it may be beneficial to consider that users may have different needs concerning transparency, and to explore how best to communicate the use of AI to them. Sophie additionally argued that her survey showed a correlation between users’ perception of AI benefits in news production and their attitude toward it, suggesting that transparency can not only secure trust between organisation and user, but also demonstrate positive aspects of AI. This would point to possible benefits of disclosing AI usage, including increased trust levels.

Industry Insights

Agnes Stenbom began with three optimistic points to add to the conversation from an industry perspective. She first highlighted the need for the AI Act to allow for experimentation in the field of media, both now and in the future.

Development is crucial for companies to stay relevant, and room for innovation should be accommodated by legislation without major administrative burdens. Although media is currently not categorized as high risk, Agnes stressed the importance of keeping the conditions for exploration with AI in media in mind as the AI Act evolves. Secondly, she underlined the advantages, for both users and developers, of the new transparency regulations placed on developers of AI models as a key component of working towards responsible AI. Finally, Agnes was cautious about highly descriptive transparency requirements in user-facing communications, arguing that they might come too close to regulating journalism and dictating media operations. The industry should to some extent remain responsible for itself. As legislative processes are time-intensive, she stressed that the industry cannot always wait for all moving targets to settle.

Generally, Stenbom stressed the value of transparency and the media industry’s responsibility. She believes that the industry should not rest on limited obligations but rather explore how it may bring transparency into practice. Many questions concerning transparency are not answered in the AI Act: Should the industry tell users about AI in practice? Should there be a standard? And are labels a good idea in the first place? Agnes believes that media organizations should not rely fully on the AI Act, especially as it is still in development, but rather seek dialogue and collaboration with one another to find solutions together.

AI, Journalism & Copyright

In his talk, Nicholas Diakopoulos argued for the value of considering how journalistic principles and standards are incorporated in the broader standards for responsible AI. It struck him that media is not classified as a higher-risk case under the AI Act despite the role generative AI plays in misinformation and the potential it has to disrupt democratic processes. This especially concerned him in the context of the upcoming 2024 presidential elections. He stressed the importance of looking ahead at technological risks that are currently invisible but may develop over time, as well as understanding the media’s use of these technologies and their impact on the media ecosystem.

Diakopoulos also referred to the recent lawsuit in which the New York Times sued OpenAI for copyright violations. In cases like these, the AI Act’s transparency regulations, which require providers to disclose the source of their training data, will likely add clarity. Nonetheless, the question of copyright and AI training data is a complicated one. On the one hand, copyright and the protection of intellectual property should be considered. On the other hand, he warned of the bias implications a politically uneven training data set may have.

On the question of speeding up the legislative process in light of impending elections and rapid technological advances, Helberger pointed to the necessity of the Act as legal groundwork that lays out the broad lines for the future. Furthermore, the AI Act itself is not necessarily useful in cases like the New York Times lawsuit, as it would only apply in the European Union. Nevertheless, it provides a first look into future AI-specific legislation.

Looking Forward

To close the debate, it is worth noting that, although not exhaustive, the AI Act puts a spotlight on issues that go beyond AI itself. Matters of transparency, copyright, bias, and responsible use of technologies are relevant in the media ecosystem and will continue to be so as technology progresses. Looking forward, it will be important to keep dialogues between industry, academia, and institutions open, to revise and look ahead.

AlgoSoc and the AI, Media and Democracy Lab thank all panelists and attendees for joining the discussion on the implications of the AI Act for the media ecosystem, and invite them to future panel discussions on the matter.
